50 Best Companies to Watch 2023

CIO Bulletin

AI-driven data solutions help companies improve efficiency by automating tasks; gain insights into customer behavior, market trends, and operational inefficiencies; make proactive decisions; personalize products and services; and build a competitive advantage. These solutions help unlock the full potential of data assets, drive informed decision-making, and improve profitability. BigTapp Analytics is a leading big data analytics and AI-driven platform that provides next-generation data solutions for businesses across industries. The company uses machine learning, big data technologies, and artificial intelligence to help businesses understand customer behavior, market trends, operational inefficiencies, and more. With its advanced analytics capabilities, BigTapp Analytics helps businesses make informed decisions and improve overall performance. Its product offerings include AI-driven data profiling, data curation, feature engineering, and predictive analytics, among others.

BigTapp Analytics is committed to providing its customers with exceptional services that meet their unique requirements and help them unlock the full potential of their data assets. The platform's robust capabilities and dedication to customer satisfaction have earned it a reputation as a reliable and innovative big data analytics partner. Analytical Blueprinting and Data Engineering are two core services that BigTapp Analytics offers to organizations of all sizes looking to leverage big data to drive business value. Analytical Blueprinting involves developing a comprehensive framework that helps businesses capture and analyze critical data effectively to gain insights into customer behavior, market trends, and operational inefficiencies. Data Engineering, by contrast, involves designing and implementing a robust, scalable infrastructure to capture, store, manage, and manipulate large volumes of data.

Analytical Blueprinting

Analytical Blueprinting is a process that involves identifying key data-driven objectives, KPIs, and performance metrics, and designing a comprehensive data architecture to support these objectives. Its goal is to help businesses develop a clear, well-planned roadmap for leveraging their data assets to achieve their business objectives. The process typically starts by assessing the current state of the organization's data architecture, including its strengths, weaknesses, and gaps. This assessment evaluates the existing data sources, the quality of the data, the data management practices in place, and their ability to support the organization's business requirements.

Based on this assessment, the analytical blueprinting team identifies the key objectives and the KPIs that will be used to measure progress toward them. This requires a deep understanding of the business and its processes across functional areas. The KPIs need to be specific, measurable, achievable, relevant, and time-bound (SMART). Once the objectives and KPIs have been identified, the next step is to design the data architecture that supports them. The data architecture must be able to meet the data collection, storage, and processing requirements needed to generate meaningful insights for informed business decisions.

Data Engineering

Data engineering is the process of designing and implementing a data architecture to support the management, organization, and processing of large volumes of structured and unstructured data. It is a critical function for organizations that need to manage and use large volumes of data sourced from various applications, databases, file systems, and storage systems. Data engineering involves several steps, including data collection, data ingestion, data cleaning, data transformation, and data storage, and each step calls for its own technologies, tools, and processes. Data collection is the process of gathering data from various sources and storing it in a centralized location. These sources can be diverse and include sensors, web logs, application data sources, social media feeds, and many others.
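To make the collection step concrete, here is a minimal Python sketch. It is an illustration, not BigTapp's implementation: it assumes two hypothetical sources, an application's CSV export and a JSON-lines web log, and gathers their records into a single in-memory list that stands in for the centralized location. The sample data and function names are made up for the example.

```python
import csv
import io
import json

# Hypothetical raw inputs standing in for two of the source types named above:
# a CSV export from an application database and JSON-lines web logs.
APP_CSV = """customer_id,amount
c1,120.50
c2,80.00
"""

WEB_LOG_JSONL = """{"customer_id": "c1", "page": "/pricing"}
{"customer_id": "c3", "page": "/signup"}
"""


def collect_from_csv(text: str, source: str) -> list[dict]:
    """Parse CSV rows into dicts and tag each one with its source."""
    return [{**row, "source": source} for row in csv.DictReader(io.StringIO(text))]


def collect_from_jsonl(text: str, source: str) -> list[dict]:
    """Parse one JSON object per non-empty line and tag it with its source."""
    return [
        {**json.loads(line), "source": source}
        for line in text.splitlines()
        if line.strip()
    ]


def collect_all() -> list[dict]:
    """Gather records from every source into one centralized collection."""
    central_store: list[dict] = []
    central_store.extend(collect_from_csv(APP_CSV, "app_db"))
    central_store.extend(collect_from_jsonl(WEB_LOG_JSONL, "web_logs"))
    return central_store


if __name__ == "__main__":
    for record in collect_all():
        print(record)
```

Tagging each record with its source keeps its origin traceable once records from different systems sit side by side, which helps in the later cleaning and transformation steps.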

Data ingestion is the process of loading data from the sources into the data processing environment or infrastructure. In some cases, this requires real-time or near-real-time processing of data streams. Data cleaning is the process of removing incomplete, irrelevant, or inconsistent records from the data set, using algorithms and other techniques to identify and eliminate data that is corrupted, duplicated, or incorrectly formatted. Data transformation is the process of converting raw data into a usable format that can be analyzed and interpreted; this may involve data aggregation, among other operations. Data storage is the process of keeping the data in a format that is easily accessible for analysis. The storage technology depends on the volume and type of data and may include databases, data lakes, cloud storage, or other storage technologies.
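The cleaning, transformation, and storage steps can be chained together, as in the following minimal Python sketch. It illustrates the steps under stated assumptions and does not describe BigTapp's platform. The records are hypothetical, and so are the `txn_id` field used to detect duplicates, the per-customer total used as the aggregation, and the in-memory SQLite table that stands in for a storage layer.

```python
import sqlite3
from collections import defaultdict

# Hypothetical raw transaction records as they might arrive after ingestion.
# The txn_id field is an assumption used here to detect duplicates.
RAW_RECORDS = [
    {"txn_id": "t1", "customer_id": "c1", "amount": "120.50"},
    {"txn_id": "t1", "customer_id": "c1", "amount": "120.50"},  # duplicate
    {"txn_id": "t2", "customer_id": "c2", "amount": "80.00"},
    {"txn_id": "t3", "customer_id": "", "amount": "15.00"},     # incomplete: no customer ID
    {"txn_id": "t4", "customer_id": "c3", "amount": "abc"},     # incorrectly formatted amount
    {"txn_id": "t5", "customer_id": "c2", "amount": "20.00"},
]


def clean(records):
    """Drop incomplete, incorrectly formatted, and duplicated records."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        txn_id = rec.get("txn_id", "").strip()
        customer_id = rec.get("customer_id", "").strip()
        if not txn_id or not customer_id:
            continue  # incomplete record
        try:
            amount = float(rec["amount"])
        except (KeyError, ValueError):
            continue  # missing or non-numeric amount
        if txn_id in seen_ids:
            continue  # duplicate transaction
        seen_ids.add(txn_id)
        cleaned.append({"txn_id": txn_id, "customer_id": customer_id, "amount": amount})
    return cleaned


def transform(records):
    """Aggregate cleaned records into a total amount per customer."""
    totals = defaultdict(float)
    for rec in records:
        totals[rec["customer_id"]] += rec["amount"]
    return dict(totals)


def store(totals, conn):
    """Write the aggregated totals to a SQLite table for later analysis."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_totals "
        "(customer_id TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customer_totals VALUES (?, ?)",
        totals.items(),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    store(transform(clean(RAW_RECORDS)), conn)
    print(conn.execute("SELECT * FROM customer_totals ORDER BY customer_id").fetchall())
    # → [('c1', 120.5), ('c2', 100.0)]
```

Cleaning happens before aggregation, so the duplicate, the record without a customer ID, and the non-numeric amount never reach the totals. In a production setting, the same stages would more likely write to a database, data lake, or cloud storage than to an in-memory table.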

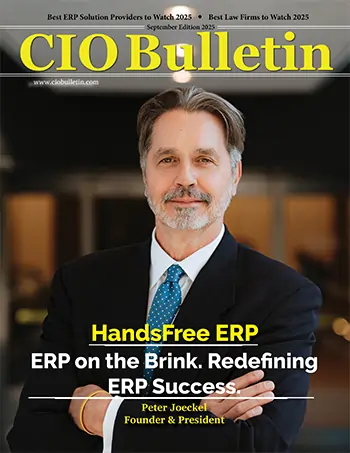

Meet the leader behind the success of BigTapp

Venkata Narayanan, CEO of BigTapp, firmly believes in using data-driven insights to guide decision-making at all levels of the organization. This belief has shaped his professional and entrepreneurial journey, including launching Knowledge Dynamics in 1997, one of the earliest proponents of analytics in Singapore. Venkat is also a passionate educator who teaches analytics at institutes of higher learning (IHLs) and in corporate settings.

Insurance and capital markets