Google Improves Search Results with BERT

IT Services

CIO Bulletin

2019-10-28

Google has introduced a core change to its search algorithm that can improve the ranking of results for about one in ten queries (English-language searches in the U.S.). The change relies on an advanced natural language processing (NLP) technique to better understand the intent behind queries.

Google's search algorithm now has a better understanding of how the words in a sentence relate to one another. According to the company, the technology is expected to roll out over the course of 10 months.

The update is significant because it affects roughly 10% of all searches. It also favors conversational queries, since it can interpret a full sentence rather than a string of keywords.

"This is the single biggest ... most positive change we've had in the last five years and perhaps one of the biggest since the beginning," says Pandu Nayak, Google's VP of Search.

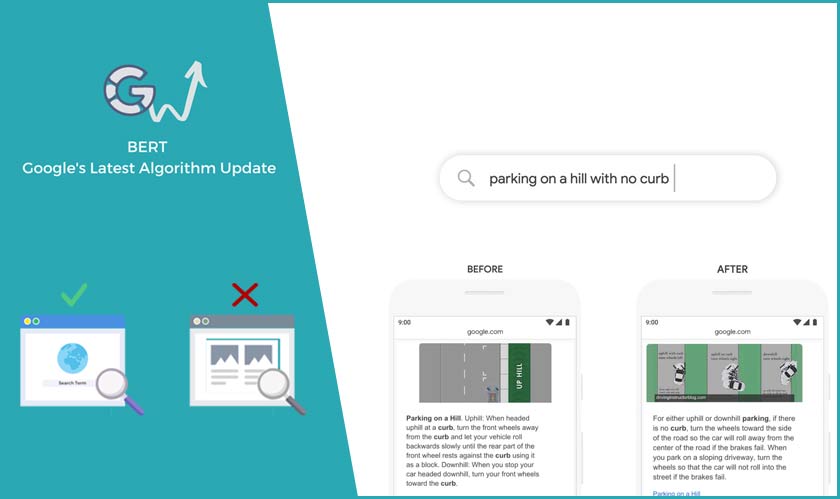

The new algorithm is based on BERT, short for "Bidirectional Encoder Representations from Transformers." In simple terms, instead of treating a sentence as a bag of words, BERT analyses all the words in a sentence together, using the context on both sides of each word.
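To make the "bag of words" contrast concrete, here is a toy illustration (not how BERT works internally). A bag of words keeps only word counts, so two sentences with the same words in a different order look identical. Recording each word's left *and* right neighbours already tells them apart. The function names and the fixed `window` parameter are hypothetical simplifications: BERT itself uses Transformer self-attention over the whole sentence rather than a fixed window.

```python
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Order-free word counts: the 'bag of words' view of a sentence."""
    return Counter(sentence.lower().split())


def bidirectional_context(sentence: str, window: int = 2) -> list[tuple[str, list[str], list[str]]]:
    """For each word, collect up to `window` words on its left AND its right.

    Simplification: a bidirectional model such as BERT conditions on the
    whole sentence via self-attention, not on a fixed-size window.
    """
    words = sentence.lower().split()
    result = []
    for i, word in enumerate(words):
        left = words[max(0, i - window):i]
        right = words[i + 1:i + 1 + window]
        result.append((word, left, right))
    return result


a = "man bites dog"
b = "dog bites man"
print(bag_of_words(a) == bag_of_words(b))                      # True: same bag, different meaning
print(bidirectional_context(a) == bidirectional_context(b))    # False: context distinguishes them
```

The example sentences are illustrative only. The point is that any representation that discards word order cannot distinguish these sentences, while one that looks at both sides of each word can.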

BERT learns to pay close attention to context through self-supervised learning. Google trained it on large amounts of text in which 15 percent of the words were randomly hidden, and BERT's task was to predict what the missing words should be. This training proved remarkably effective at teaching the model to "understand" context.

Google cited the example query "parking on a hill with no curb." The word "no" is key to its meaning, yet before BERT, Google's systems placed too much importance on "curb" and ignored "no."
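One simple way a short word like "no" can vanish is keyword extraction with a stop-word list. The sketch below illustrates that failure mode in general. It does not describe Google's pre-BERT system, and the `STOP_WORDS` set and `keywords` function are hypothetical.

```python
# Hypothetical stop-word list. Classic keyword pipelines often discard
# short function words like these, and "no" can be among them.
STOP_WORDS = {"on", "a", "with", "no", "the", "to", "for"}


def keywords(query: str) -> set[str]:
    """Reduce a query to its content words, discarding stop words and word order."""
    return {w for w in query.lower().split() if w not in STOP_WORDS}


with_no = "parking on a hill with no curb"
with_curb = "parking on a hill with a curb"
print(keywords(with_no))
print(keywords(with_no) == keywords(with_curb))  # True: the negation has vanished
```

Both queries reduce to the same three content words, so a system matching on them alone cannot tell "no curb" from "a curb." A model that reads the full sentence in context keeps that distinction.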
